- If there are sequences x,y

- Encoder is any Recurrent with a forward state hT and hT for backward

- Concat them represents the preceding and following word annotations

- hi=[hiT;hiT], i=1,…,n

- Decoder has hidden state st=f(st−1,yt−1,ct) for the output word at position t for t=1,…,m

- Context vector ct is a sum of hidden states of the input seq, weighted by alignment scores

- ct=Σi=1nαt,ihi

- How well the two words are aligned is given by

- αt,i=align(yt,xi)

- Taking Softmax

- Σi′−1nexp(score(st−1,hi′))exp(score(st−1,hi))

- fatt(hi,sj)=vaTtanh(Wa[hi;sj])

- va and Wa are the learned Attention params

- h is the hidden state for the encoder

- s is the hidden state for the decoder

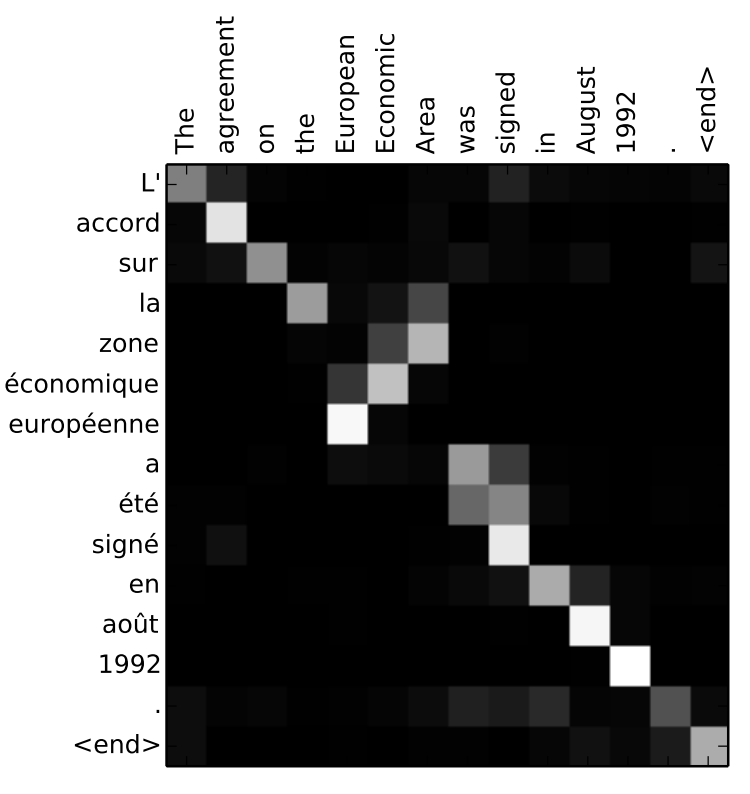

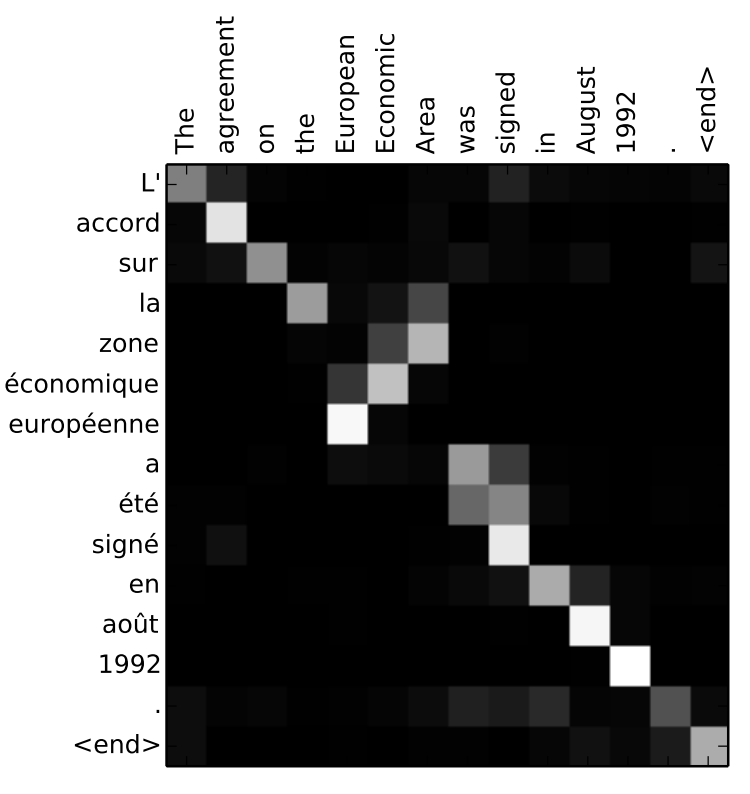

- Matrix of alignment

- Final scores calculated with a Softmax