Batch Normalization

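- Set bias=False on Linear/Conv2D layers whose output goes into batch norm (the mean subtraction cancels the bias, and β supplies the shift); keep bias=True on the output layer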

- Normalizes each layer's inputs using mini-batch statistics

- The input distribution of each layer changes during training → make sure it stays similar

- Reduces internal covariate shift: without it, each layer must keep adapting to the shifting distribution of its inputs

- At test time: use the mean and variance statistics saved during training

- Simplifies learning dynamics

- Can use larger learning rate

- Higher-order interactions are suppressed because the mean and std are independent of the activations, which makes training easier

- Doesn't work well with small batches (the batch statistics become noisy). Not great with RNNs
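Two of the points above (saved statistics at test time, and the redundant bias) can be checked with a small sketch. It handles a single scalar feature in plain Python. The class name `BatchNorm1Feature`, the exponential moving average, and the `momentum=0.1` default are assumptions made for illustration; real frameworks differ in details such as which variance estimate they store.

```python
import math


class BatchNorm1Feature:
    """Batch norm for one scalar feature (hypothetical sketch, not a library API)."""

    def __init__(self, momentum=0.1, eps=1e-5):
        self.gamma = 1.0          # learnable scale (fixed here)
        self.beta = 0.0           # learnable shift (fixed here)
        self.momentum = momentum  # assumed moving-average rate
        self.eps = eps
        self.running_mean = 0.0   # statistics saved for test time
        self.running_var = 1.0
        self.training = True

    def __call__(self, xs):
        if self.training:
            # Training: use the statistics of the current mini-batch.
            m = len(xs)
            mean = sum(xs) / m
            var = sum((x - mean) ** 2 for x in xs) / m
            # Save them as an exponential moving average for later.
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            # Testing: reuse the saved statistics, so batch size 1 is fine.
            mean, var = self.running_mean, self.running_var
        return [self.gamma * (x - mean) / math.sqrt(var + self.eps) + self.beta for x in xs]


batch = [1.0, 2.0, 3.0, 4.0]

bn = BatchNorm1Feature()
out = bn(batch)

# A constant bias added before batch norm is removed by the mean subtraction.
shifted = BatchNorm1Feature()([x + 5.0 for x in batch])

bn.training = False
single = bn([2.5])  # uses running_mean / running_var, not batch statistics
```

Here `out` and `shifted` agree up to floating-point rounding, which is why a bias term in the preceding layer adds nothing. In test mode a batch of one sample still works because no batch statistics are computed.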

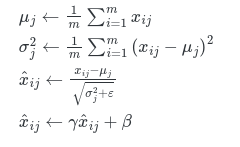

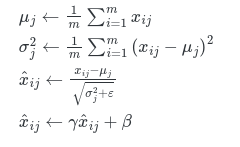

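- $\mu_j \leftarrow \frac{1}{m}\sum_{i=1}^{m} x_{ij}$

- $\sigma_j^2 \leftarrow \frac{1}{m}\sum_{i=1}^{m} (x_{ij} - \mu_j)^2$

- $\hat{x}_{ij} \leftarrow \frac{x_{ij} - \mu_j}{\sqrt{\sigma_j^2 + \epsilon}}$

- $\hat{x}_{ij} \leftarrow \gamma \hat{x}_{ij} + \beta$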
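The four update rules above can be sketched in plain Python, with `i` running over the m samples of the mini-batch and `j` over the features, to mirror the indices in the formulas. The function name `batch_norm_forward` and the per-feature lists `gamma` and `beta` are assumptions for illustration; the notes write γ and β without an index.

```python
import math


def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Training-mode batch norm (illustrative sketch).

    x: list of m rows, each a list of d feature values.
    gamma, beta: lists of length d (assumed per-feature scale and shift).
    """
    m = len(x)
    d = len(x[0])

    # Per-feature mean over the mini-batch.
    mu = [sum(x[i][j] for i in range(m)) / m for j in range(d)]

    # Per-feature (biased) variance over the mini-batch.
    var = [sum((x[i][j] - mu[j]) ** 2 for i in range(m)) / m for j in range(d)]

    # Normalize; eps keeps the denominator away from zero.
    x_hat = [[(x[i][j] - mu[j]) / math.sqrt(var[j] + eps) for j in range(d)]
             for i in range(m)]

    # Scale and shift with the learnable parameters.
    y = [[gamma[j] * x_hat[i][j] + beta[j] for j in range(d)] for i in range(m)]
    return y, mu, var


# Two features on very different scales end up on the same scale.
x = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
y, mu, var = batch_norm_forward(x, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

With γ = 1 and β = 0, each column of `y` has mean approximately 0 and variance approximately 1, regardless of the original scale of that feature.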

Why