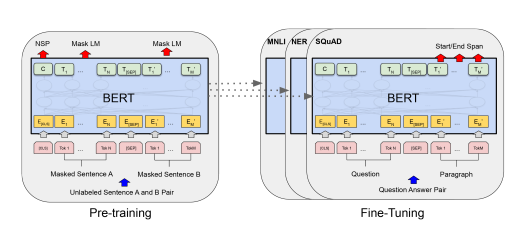

BERT

- Bidirectional Encoder Representations from Transformers

- Uses Token Embedding

- Self-supervised pre-training

- Masked language modeling, next sentence prediction

- [CLS]: placed at the start of the input and used for classification tasks; [SEP]: placed between sentences; [MASK]: stands in for a masked token

- christianversloot #Roam-Highlights

- BERT base has 12 encoder segments stacked on top of each other, a 768-dimensional intermediate state, and 12 attention heads (hence 768/12 = 64 dimensions per attention head).

- BERT large has 24 encoder segments, a 1024-dimensional intermediate state, and 16 attention heads (1024/16 = 64 dimensions per attention head again).
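The per-head arithmetic above can be checked with a short sketch. The dictionary keys and the `head_dim` helper are hypothetical names chosen for illustration; only the numbers come from the notes.

```python
# Configurations as stated in the notes; the key names are illustrative only.
CONFIGS = {
    "bert-base": {"layers": 12, "hidden_size": 768, "heads": 12},
    "bert-large": {"layers": 24, "hidden_size": 1024, "heads": 16},
}


def head_dim(hidden_size: int, heads: int) -> int:
    """Per-head dimension: the intermediate state is split evenly across heads."""
    if hidden_size % heads != 0:
        raise ValueError("hidden_size must be divisible by the number of heads")
    return hidden_size // heads


for name, cfg in CONFIGS.items():
    # Both configurations end up with 64-dimensional attention heads.
    print(name, head_dim(cfg["hidden_size"], cfg["heads"]))
```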

- BERT uses only the encoder segment of the Transformer, so it outputs one vector for every input token. The first vector, which corresponds to the [CLS] token, is called C in the BERT paper: it is the “class vector” that contains sentence-level information (or, in the case of multiple sentences, information about the sentence pair). All other vectors represent information about their specific token.

- In other words, structuring BERT this way allows us to perform both sentence-level and token-level tasks. If we want to work with sentence-level information, we build on top of the output for the [CLS] token.
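As a minimal sketch of that split, assume the encoder output is a list with one vector per input position. The `split_encoder_output` helper and the toy numbers are hypothetical and do not come from any BERT library.

```python
from typing import List, Tuple

Vector = List[float]


def split_encoder_output(hidden_states: List[Vector]) -> Tuple[Vector, List[Vector]]:
    """Separate the vector at position 0 ([CLS]) from the remaining vectors."""
    if not hidden_states:
        raise ValueError("encoder output is empty")
    return hidden_states[0], hidden_states[1:]


# Toy stand-in for an encoder output: 4 positions, hidden size 3.
toy_output = [
    [0.1, 0.2, 0.3],  # [CLS]
    [1.0, 1.1, 1.2],  # "hello"
    [2.0, 2.1, 2.2],  # "world"
    [3.0, 3.1, 3.2],  # [SEP]
]

# class_vector feeds a sentence-level head (e.g. a classifier);
# token_vectors feed token-level heads (e.g. tagging). Note that the
# remaining vectors still include the one for [SEP].
class_vector, token_vectors = split_encoder_output(toy_output)
```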

Next Sentence Prediction (NSP)

- This task encourages the model to learn sentence-level information. It is also simple, and it is the reason why BERT inputs can be a pair of sentences. NSP trains the model to understand the relationship between two sentences, which tasks such as textual entailment depend on.

- Constructing a training dataset for this task is simple: given an unlabeled corpus, we take a sentence A and, in 50% of cases, pair it with the sentence B that actually follows it. In the other 50% of cases, we pair A with a sentence drawn at random from the corpus (Devlin et al., 2018). This way, we can construct a dataset with a 50/50 split between ‘is next’ and ‘is not next’ pairs.
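The construction described above could be sketched roughly as follows. This is a simplified illustration, not the original pre-processing code: it assumes the corpus is a flat list of unique sentences, and `make_nsp_pairs` is a hypothetical name. The split is 50/50 in expectation rather than exact.

```python
import random
from typing import List, Tuple


def make_nsp_pairs(sentences: List[str], seed: int = 0) -> List[Tuple[str, str, bool]]:
    """Build (sentence A, sentence B, is_next) examples from consecutive sentences."""
    if len(sentences) < 3:
        raise ValueError("need at least 3 sentences to draw a random non-next sentence")
    rng = random.Random(seed)
    pairs = []
    for i in range(len(sentences) - 1):
        a = sentences[i]
        if rng.random() < 0.5:
            # 'is next': B is the sentence that actually follows A.
            pairs.append((a, sentences[i + 1], True))
        else:
            # 'is not next': B is drawn at random, excluding A and the true next sentence.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((a, rng.choice(candidates), False))
    return pairs


corpus = [
    "The cat sat on the mat.",
    "It fell asleep in the sun.",
    "Rain was forecast for the evening.",
    "The train left on time.",
    "Dinner was served at eight.",
]
pairs = make_nsp_pairs(corpus)
```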

It therefore does not matter whether your downstream task involves a single text or text pairs; BERT can handle both:

- Sentence pairs in paraphrasing tasks.

- Hypothesis-premise pairs in textual entailment tasks.

- Question-answer pairs in question answering.

- Text-empty pair in text classification.

- Yes, you read that right: sentence B is empty if your goal is to fine-tune for text classification. There simply is no sentence after the [SEP] token.
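To make the two input shapes concrete, here is a hedged sketch that assembles the token sequence for a text pair and for a single text. It assumes whitespace tokenization purely for readability (BERT itself uses WordPiece), and `build_bert_input` is a hypothetical helper. The segment ids mark which sentence each position belongs to.

```python
from typing import List, Optional, Tuple


def build_bert_input(text_a: str, text_b: Optional[str] = None) -> Tuple[List[str], List[int]]:
    """Return (tokens, segment_ids) for a single text or a text pair."""
    # Whitespace split is a simplification; real BERT uses WordPiece tokens.
    tokens_a = text_a.split()
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    if text_b:
        # Sentence B and its closing [SEP] belong to segment 1.
        tokens_b = text_b.split()
        tokens += tokens_b + ["[SEP]"]
        segment_ids += [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


# Text pair, e.g. question answering.
pair_tokens, pair_segments = build_bert_input("is it raining", "yes it is")

# Single text, e.g. text classification: nothing follows the first [SEP].
single_tokens, single_segments = build_bert_input("the movie was great")
```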

- Fine-tuning is also relatively inexpensive compared to pre-training.
