Chapter 4 - Deep Neural Networks

Intro

- Deep networks : more than one hidden layer

- Both deep and shallow networks (with ReLU activations) describe piecewise linear mappings from input → output

- A shallow network can describe complex functions, but it might need too many hidden units to be practical.

Why did deep learning take off

- Krizhevsky et al. (2012), aka AlexNet (trained on ImageNet)

- Larger datasets

- Improved processing power for training

- ReLU

- SGD

Composing networks

General deep neural networks

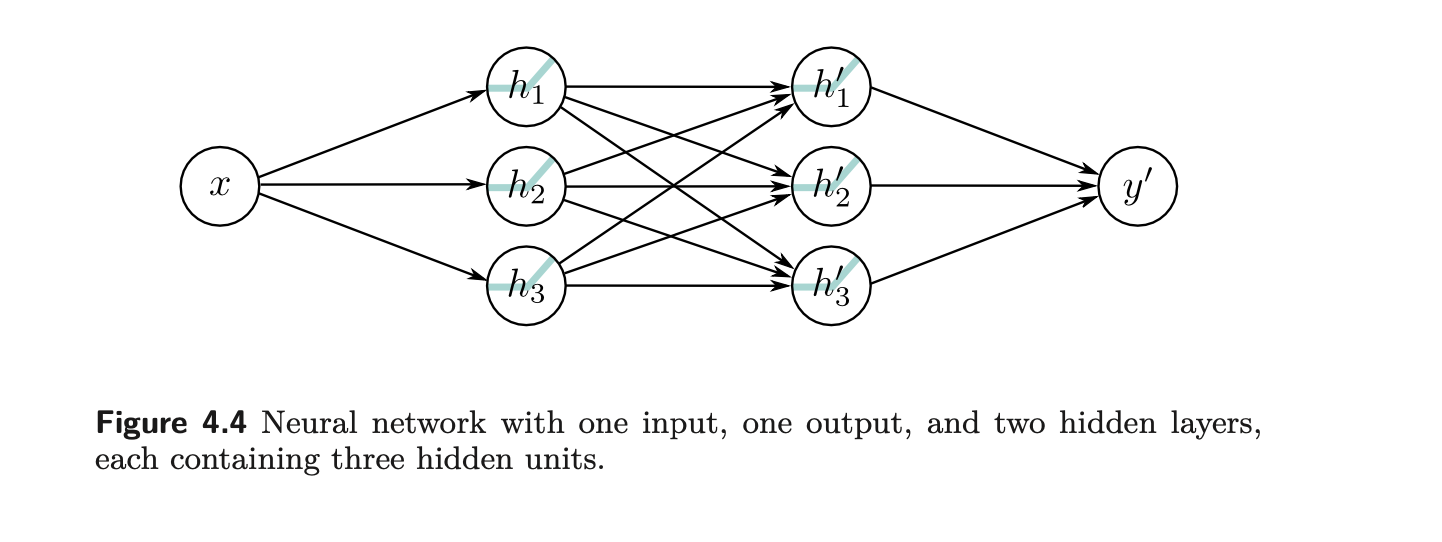

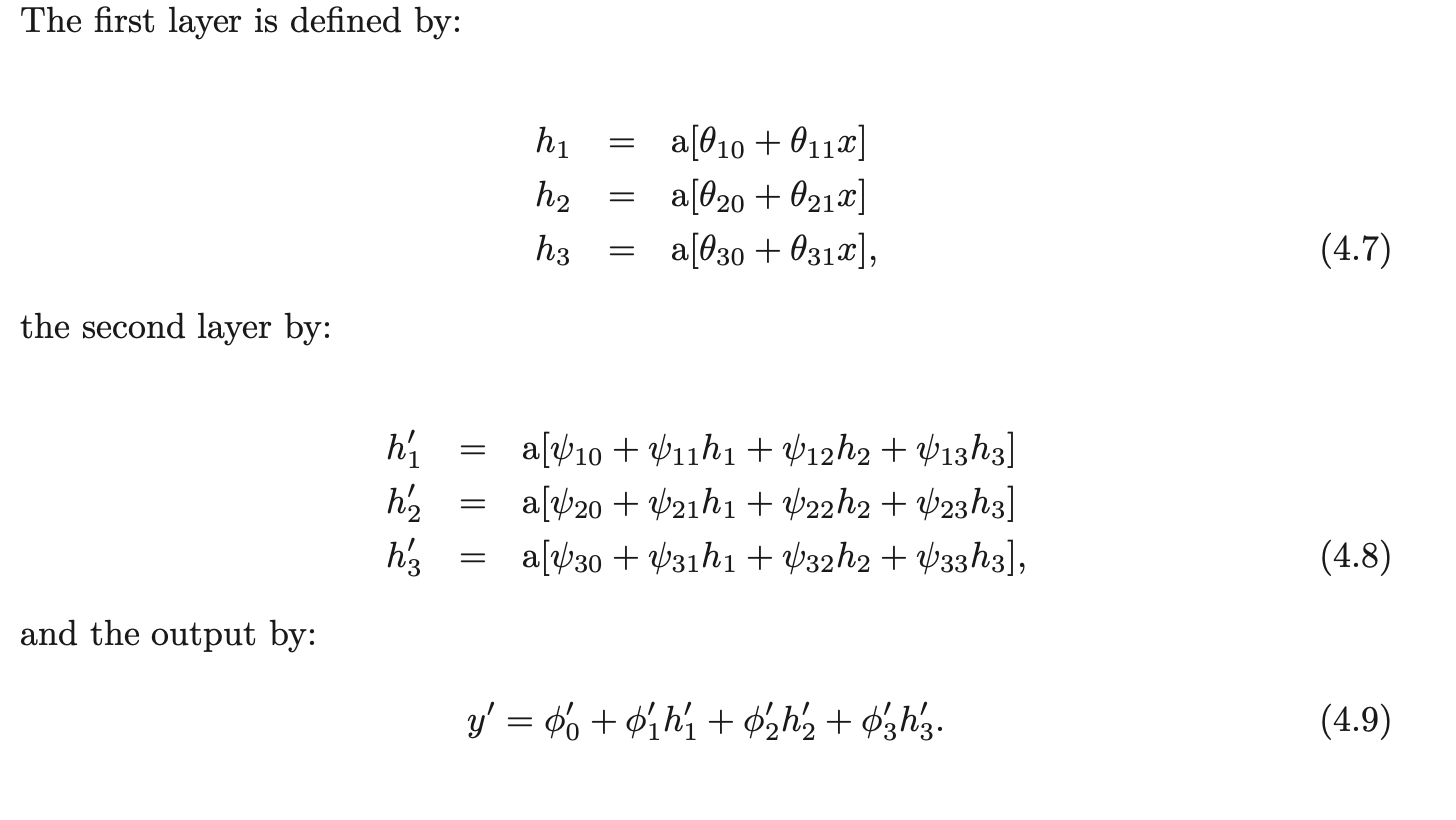

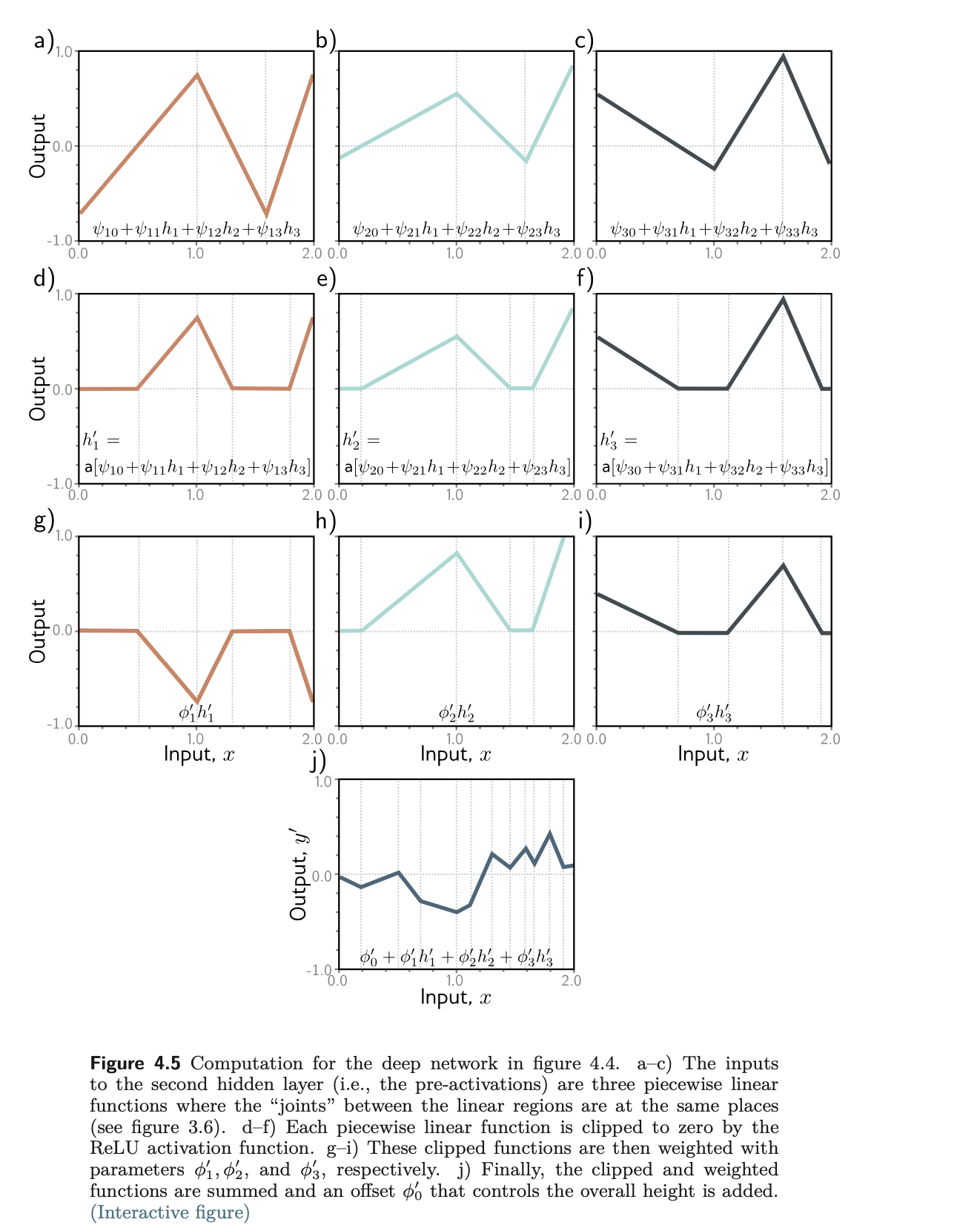

- Consider a deep network with 2 hidden layers, each of which has 3 hidden units
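The network in the bullet above can be sketched in NumPy. This is a minimal illustration, not a reference implementation: it assumes a scalar input and scalar output, ReLU activations, and randomly drawn weights; the names `relu`, `deep_forward`, `sizes`, and `params` are made up for this sketch.

```python
import numpy as np

def relu(z):
    # Elementwise ReLU: negative pre-activations are clipped to zero.
    return np.maximum(z, 0.0)

def deep_forward(x, params):
    """Forward pass; params is a list of (weights, bias) pairs, one per layer."""
    h = x
    # Hidden layers: linear map followed by ReLU.
    for W, b in params[:-1]:
        h = relu(W @ h + b)
    # Output layer: linear map only, no activation.
    W_out, b_out = params[-1]
    return W_out @ h + b_out

# Assumed sizes: 1 input, two hidden layers of 3 units each, 1 output.
sizes = [1, 3, 3, 1]
rng = np.random.default_rng(0)
params = [
    (rng.normal(size=(n_out, n_in)), rng.normal(size=n_out))
    for n_in, n_out in zip(sizes[:-1], sizes[1:])
]

# Evaluate the network on a small grid of scalar inputs.
xs = np.linspace(-2.0, 2.0, 9)
ys = np.array([deep_forward(np.array([x]), params)[0] for x in xs])

# Total parameters: (3 + 3) + (9 + 3) + (3 + 1) = 22
n_params = sum(W.size + b.size for W, b in params)
```

Plotting `ys` against a finer grid of `xs` should show a piecewise linear curve, since every operation is either linear or a ReLU.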

How does the network deal with complicated functions?

Hyperparameters

- Capacity : total number of hidden units

- NNs represent a family of families of functions relating input to output: the hyperparameters select the family, and the parameters select one function within it

Width

- Number of hidden units in each layer (D1,D2,...,DK)

Depth

- Number of hidden layers (K)
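The three quantities above (capacity, width, depth) can all be read off a list of layer widths. The helper below is a hypothetical sketch, not from the notes; it assumes fully connected layers with one bias per unit, and the name `describe_network` and its arguments are invented for illustration.

```python
def describe_network(d_in, widths, d_out):
    """Summarize a fully connected network from its layer widths.

    d_in   : input dimension
    widths : hidden units per layer, i.e. [D1, D2, ..., DK]
    d_out  : output dimension
    """
    depth = len(widths)        # K, the number of hidden layers
    capacity = sum(widths)     # total number of hidden units
    sizes = [d_in, *widths, d_out]
    # Each layer has n_in * n_out weights plus n_out biases.
    n_params = sum((n_in + 1) * n_out
                   for n_in, n_out in zip(sizes[:-1], sizes[1:]))
    return {
        "depth": depth,
        "widths": list(widths),
        "capacity": capacity,
        "n_params": n_params,
    }

# The 2-hidden-layer, 3-units-per-layer example with scalar input and output.
info = describe_network(1, [3, 3], 1)
```

For this example, `info` reports depth 2, capacity 6, and 22 parameters.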

Matrix notation to represent deep networks
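
One common way to write a deep network with K hidden layers in matrix form is sketched below. The symbol names are assumptions rather than notation fixed by these notes: $\mathbf{a}[\cdot]$ is the elementwise activation (e.g. ReLU), $\boldsymbol{\Omega}_k$ are weight matrices, $\boldsymbol{\beta}_k$ are bias vectors, and $D_i$, $D_o$ are the input and output dimensions.

$$
\begin{aligned}
\mathbf{h}_1 &= \mathbf{a}\left[\boldsymbol{\beta}_0 + \boldsymbol{\Omega}_0 \mathbf{x}\right] \\
\mathbf{h}_{k+1} &= \mathbf{a}\left[\boldsymbol{\beta}_k + \boldsymbol{\Omega}_k \mathbf{h}_k\right], \qquad k = 1, \ldots, K-1 \\
\mathbf{y} &= \boldsymbol{\beta}_K + \boldsymbol{\Omega}_K \mathbf{h}_K
\end{aligned}
$$

Under these assumptions, $\boldsymbol{\Omega}_0$ is $D_1 \times D_i$, $\boldsymbol{\Omega}_k$ is $D_{k+1} \times D_k$ for $k = 1, \ldots, K-1$, and $\boldsymbol{\Omega}_K$ is $D_o \times D_K$, so the widths D1, ..., DK fix the shape of every matrix.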

Shallow vs deep networks

Other notes