PCA

- An $m$-dimensional affine hyperplane spanned by the first $m$ eigenvectors. Only manifolds, no codebook vectors

- Be able to reconstruct $x$ from $f(x)$ via a decoding function $d$: $x \approx d \circ f(x)$

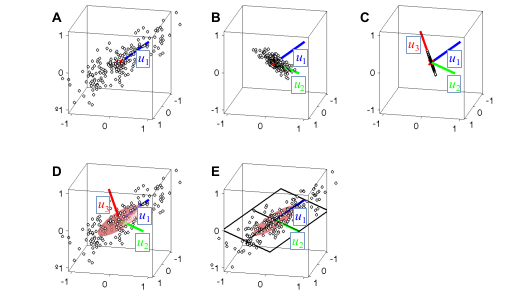

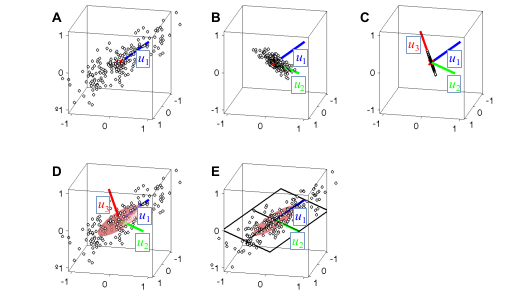

Steps

- Center data (A)

- Subtract their mean from each pattern.

- $\mu = \frac{1}{N}\sum_i x_i$, giving the centered patterns $\bar{x}_i = x_i - \mu$

- The point cloud now has its center of gravity at the origin

- The cloud extends further in some “directions”, characterized by unit-norm direction vectors $u \in \mathbb{R}^n$

- Distance of a point from the origin in the direction of $u$: the projection of $\bar{x}_i$ on $u$, i.e. the inner product $u'\bar{x}_i$

- Extension of the cloud in direction $u$: mean squared distance to the origin

- Largest extension: $u_1 = \arg\max_{u,\ \|u\|=1} \frac{1}{N}\sum_i (u'\bar{x}_i)^2$

- Since the data are centered, the mean is 0 and $\frac{1}{N}\sum_i (u'\bar{x}_i)^2$ is the variance of the projections on $u$

- u1 is the longest direction : First PC : PC1
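
Centering and the search for the direction of largest extension can be sketched in NumPy. The toy data, the helper name `extension`, and the brute-force scan over angles are assumptions made for illustration only; they are not from the notes, and the scan only works in two dimensions.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical toy data: N = 500 patterns in n = 2 dimensions,
# stretched along the first axis and shifted away from the origin.
X = rng.normal(size=(500, 2)) @ np.array([[3.0, 0.0], [0.0, 0.5]]) + np.array([5.0, -2.0])

mu = X.mean(axis=0)   # mu = (1/N) sum_i x_i
X_bar = X - mu        # centered patterns x_bar_i = x_i - mu


def extension(u, X_bar):
    """Mean squared projection (1/N) sum_i (u' x_bar_i)^2 for a unit vector u."""
    return np.mean((X_bar @ u) ** 2)


# Scan unit vectors u = (cos a, sin a); u and -u give the same extension,
# so angles in [0, pi) are enough.
angles = np.linspace(0.0, np.pi, 1800, endpoint=False)
candidates = np.stack([np.cos(angles), np.sin(angles)], axis=1)
scores = np.array([extension(u, X_bar) for u in candidates])
u1 = candidates[np.argmax(scores)]   # approximate PC1
```

The scan only approximates the maximizer up to the angular grid resolution; the eigenvector route described under "Compute SVD" gives it directly.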

- Project points (B)

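- Find the subspace orthogonal (at 90°) to $u_1$: an $(n-1)$-dimensional linear subspace

- Map all points $\bar{x}$ to $\bar{x}^* = \bar{x} - (u_1'\bar{x})\,u_1$

- Second PC (PC2): $u_2 = \arg\max_{u,\ \|u\|=1} \frac{1}{N}\sum_i (u'\bar{x}_i^*)^2$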

- Rinse and repeat (C)

- New PCs plotted in original cloud (D)
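
Steps (A) to (C) amount to a deflation loop: find the direction of largest variance, project it out, and repeat. A minimal sketch follows, assuming NumPy; the function names `top_direction` and `pca_by_deflation` and the toy data are hypothetical. The maximizing direction is taken from an eigendecomposition here, which is one possible way to solve the argmax.

```python
import numpy as np


def top_direction(X_bar):
    """Unit vector u maximizing (1/N) sum_i (u' x_bar_i)^2."""
    C = X_bar.T @ X_bar / X_bar.shape[0]
    eigvals, eigvecs = np.linalg.eigh(C)   # eigenvalues in ascending order
    return eigvecs[:, -1]


def pca_by_deflation(X, m):
    """Return an (n, m) matrix whose columns are u_1, ..., u_m."""
    X_bar = X - X.mean(axis=0)                  # (A) center the data
    pcs = []
    for _ in range(m):                          # (C) rinse and repeat
        u = top_direction(X_bar)
        pcs.append(u)
        X_bar = X_bar - np.outer(X_bar @ u, u)  # (B) x* = x - (u'x) u
    return np.stack(pcs, axis=1)


rng = np.random.default_rng(1)
# Hypothetical toy data with different spreads along the three axes.
X = rng.normal(size=(400, 3)) * np.array([4.0, 2.0, 0.5])
U = pca_by_deflation(X, 3)
```

Each new direction is found inside the subspace orthogonal to the previous ones, so the columns of `U` come out orthonormal up to rounding.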

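- Features $f_k: \mathbb{R}^n \to \mathbb{R}$, $x \mapsto u_k'\bar{x}$

- Reconstruction: $x = \mu + \sum_{k=1}^{n} f_k(x)\,u_k$

- Keep only the first few PCs, up to index $m$

- Feature vector: $(f_1(x), \ldots, f_m(x))'$

- Decoding function $d: (f_1(x), \ldots, f_m(x))' \mapsto \mu + \sum_{k=1}^{m} f_k(x)\,u_k$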
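
The feature map and the decoding function can be sketched as a pair of small functions. This is an illustration under assumptions: the names `encode` and `decode`, the toy data, and obtaining the PCs from `np.linalg.eigh` are not from the notes.

```python
import numpy as np

rng = np.random.default_rng(2)
# Hypothetical toy data: 300 patterns in n = 5 dimensions.
X = rng.normal(size=(300, 5)) * np.array([5.0, 3.0, 1.0, 0.2, 0.1])

mu = X.mean(axis=0)
X_bar = X - mu
eigvals, eigvecs = np.linalg.eigh(X_bar.T @ X_bar / len(X))
U = eigvecs[:, np.argsort(eigvals)[::-1]]   # columns u_1, ..., u_n, largest variance first


def encode(x, m):
    """Feature vector (f_1(x), ..., f_m(x))' with f_k(x) = u_k' (x - mu)."""
    return U[:, :m].T @ (x - mu)


def decode(f):
    """d(f) = mu + sum_{k=1}^{m} f_k u_k, where m is the length of f."""
    return mu + U[:, : f.shape[0]] @ f


x = X[0]
x_full = decode(encode(x, 5))   # all n PCs: exact reconstruction up to rounding
x_m2 = decode(encode(x, 2))     # first 2 PCs only: approximate reconstruction
```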

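- How good is the reconstruction?

- Mean squared reconstruction error when keeping $m$ PCs: $\sum_{k=m+1}^{n}\left(\frac{1}{N}\sum_i f_k(x_i)^2\right)$

- Relative amount of dissimilarity, compared to the mean empirical variance of the patterns, equation (1):

- $\dfrac{\sum_{k=m+1}^{n}\left(\frac{1}{N}\sum_i f_k(x_i)^2\right)}{\sum_{k=1}^{n}\left(\frac{1}{N}\sum_i f_k(x_i)^2\right)}$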

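- In many data sets the ratio becomes very small as $m$ grows, because the variances along the later PCs (larger index $k$) shrink quickly. Very little information is then lost by reducing dimensions, which makes PCA a good fit for very high-dimensional data.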
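
The reconstruction-quality measure can be checked numerically. The sketch below assumes NumPy and hypothetical toy data; the names `var_k` and `relative_error` are illustrative. It also compares the sum of discarded variances against the directly measured mean squared reconstruction error.

```python
import numpy as np

rng = np.random.default_rng(3)
# Hypothetical toy data: 1000 patterns in n = 6 dimensions with rapidly shrinking spread.
X = rng.normal(size=(1000, 6)) * np.array([6.0, 3.0, 1.0, 0.3, 0.1, 0.05])

mu = X.mean(axis=0)
X_bar = X - mu
eigvals, U = np.linalg.eigh(X_bar.T @ X_bar / len(X))
U = U[:, np.argsort(eigvals)[::-1]]   # PCs as columns, largest variance first

F = X_bar @ U                    # F[i, k-1] = f_k(x_i)
var_k = np.mean(F ** 2, axis=0)  # (1/N) sum_i f_k(x_i)^2 for each k


def relative_error(m):
    """Discarded variance (k = m+1..n) divided by total variance (k = 1..n)."""
    return var_k[m:].sum() / var_k.sum()


m = 2
X_rec = mu + F[:, :m] @ U[:, :m].T                # decode from the first m features
mse = np.mean(np.sum((X - X_rec) ** 2, axis=1))   # measured mean squared error
```

Here `mse` agrees with `var_k[m:].sum()` up to rounding, which is why the ratio can be computed from the variances alone.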

- Compute SVD

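- $u_1, \ldots, u_n$ form orthonormal, real eigenvectors of the covariance matrix $C = \frac{1}{N}\sum_i \bar{x}_i\bar{x}_i'$

- The variances $\sigma_1^2, \ldots, \sigma_n^2$ are the corresponding eigenvalues

- $C = U\Sigma U'$ gives the PC vectors $u_k$ as the columns of $U$ and the variances $\sigma_k^2$ as the eigenvalues on the diagonal of $\Sigma$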

- To preserve 98% of the variance: choose the smallest $m$ such that the ratio in (1) is at most $1 - 0.98 = 0.02$
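
A minimal sketch of the SVD route and the 98% rule, assuming NumPy. The toy data and the helper name `smallest_m` are hypothetical. Because $C$ is symmetric and positive semidefinite, `np.linalg.svd` returns its eigenvalues as singular values in descending order.

```python
import numpy as np

rng = np.random.default_rng(4)
# Hypothetical toy data: 800 patterns in n = 6 dimensions.
X = rng.normal(size=(800, 6)) * np.array([6.0, 3.0, 1.5, 0.3, 0.1, 0.05])

X_bar = X - X.mean(axis=0)
C = X_bar.T @ X_bar / len(X)   # covariance matrix of the centered patterns

# C = U diag(S) U': columns of U are the PCs u_k, S holds the variances sigma_k^2.
U, S, Vt = np.linalg.svd(C)


def smallest_m(variances, keep=0.98):
    """Smallest m whose discarded-variance ratio is at most 1 - keep."""
    total = variances.sum()
    for m in range(len(variances) + 1):
        if variances[m:].sum() / total <= 1.0 - keep:
            return m
    return len(variances)


m98 = smallest_m(S)   # number of PCs needed to preserve 98% of the variance
```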