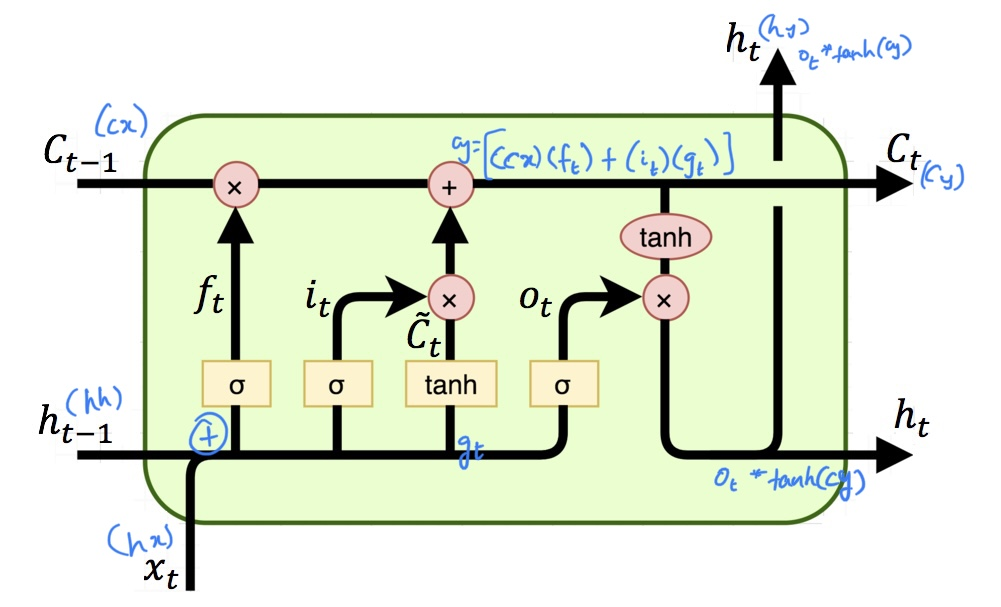

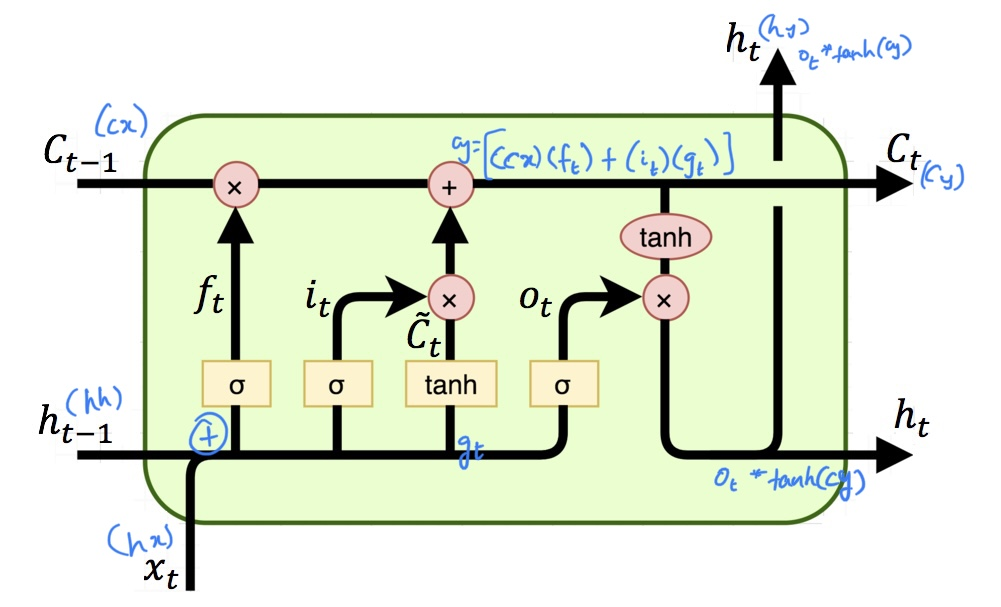

Long Short-Term Memory (LSTM)

- Smaller chance of exploding or vanishing gradients

- Better ability to model long term dependencies

- Gated connections

- Gates that learn to forget some aspects, and remember others better

- Splitting the state into two parts → output prediction and feature learning

- Ultimately, LSTMs still struggle with very long sequences. Therefore → Transformer
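
To make the gradient point in the list above concrete, here is a minimal numerical sketch. It rests on a simplification that these notes do not state: along the direct cell-state path, the gradient across one timestep is scaled by the forget gate value, whereas in a plain scalar tanh RNN it is scaled by the recurrent weight times the tanh derivative. The function names and the values `w`, `pre_activation`, and `forget_gate` are hypothetical, chosen only for illustration.

```python
import numpy as np


def rnn_gradient_scale(steps, w=0.9, pre_activation=1.0):
    """Scale of d h_T / d h_0 for a scalar tanh RNN with a fixed pre-activation.

    Each step multiplies the gradient by w * tanh'(a) = w * (1 - tanh(a)**2).
    """
    per_step = w * (1.0 - np.tanh(pre_activation) ** 2)
    return per_step ** steps


def lstm_cell_gradient_scale(steps, forget_gate=0.95):
    """Scale of d C_T / d C_0 along the direct cell-state path only.

    Because C_t = g_f * C_{t-1} + g_i * C_hat, each step multiplies by g_f.
    The indirect dependence of the gates on h_{t-1} is ignored here.
    """
    return forget_gate ** steps


# Compare how quickly the two gradient scales shrink with sequence length.
for steps in (10, 50, 100):
    print(steps, rnn_gradient_scale(steps), lstm_cell_gradient_scale(steps))
```

With a forget gate close to 1 the scale shrinks far more slowly than in the plain RNN case, but it still shrinks, which is consistent with the remark that very long sequences remain difficult.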

The Math

- Gates

- Forget: $g_f = \sigma(W_{hf} h_{t-1} + W_{xf} x_t + b_f)$

- How much of the previous cell state is used

- Input: $g_i = \sigma(W_{hi} h_{t-1} + W_{xi} x_t + b_i)$

- How much of the proposal is added to the cell state

- Output: $g_o = \sigma(W_{ho} h_{t-1} + W_{xo} x_t + b_o)$

- Hidden state

- $C_t$ to model cross-timestep dependencies

- Cell state proposal: $\hat{C} = \tanh(W_{hc} h_{t-1} + W_{xc} x_t + b_c)$

- Final cell state: $C_t = g_f \cdot C_{t-1} + g_i \cdot \hat{C}$

- $h_t$ to predict output
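
The gate and state equations above can be sketched as a single timestep in NumPy. This is a minimal illustration, not a reference implementation. Two things here are assumptions: the notes do not say how $h_t$ is computed, so the sketch uses the common choice of the output gate multiplied elementwise by the tanh of the cell state; and the helper names (`sigmoid`, `lstm_step`, `init_params`), the parameter dictionary layout, and the sizes are hypothetical.

```python
import numpy as np


def sigmoid(z):
    """Logistic function used by all three gates."""
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM timestep following the gate equations in the notes."""
    # Gates: each is a sigmoid of a linear map of h_{t-1} and x_t.
    g_f = sigmoid(params["W_hf"] @ h_prev + params["W_xf"] @ x_t + params["b_f"])
    g_i = sigmoid(params["W_hi"] @ h_prev + params["W_xi"] @ x_t + params["b_i"])
    g_o = sigmoid(params["W_ho"] @ h_prev + params["W_xo"] @ x_t + params["b_o"])

    # Cell state proposal and final cell state (products are elementwise).
    c_hat = np.tanh(params["W_hc"] @ h_prev + params["W_xc"] @ x_t + params["b_c"])
    c_t = g_f * c_prev + g_i * c_hat

    # Assumption: standard LSTM readout, not given in the notes.
    h_t = g_o * np.tanh(c_t)
    return h_t, c_t


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases for the three gates and the proposal."""
    rng = np.random.default_rng(seed)
    params = {}
    for name in ("f", "i", "o", "c"):
        params[f"W_h{name}"] = rng.normal(0.0, 0.1, (hidden_size, hidden_size))
        params[f"W_x{name}"] = rng.normal(0.0, 0.1, (hidden_size, input_size))
        params[f"b_{name}"] = np.zeros(hidden_size)
    return params


# Run a short random sequence through the cell, carrying h and C forward.
params = init_params(input_size=3, hidden_size=4)
h = np.zeros(4)
c = np.zeros(4)
sequence = np.random.default_rng(1).normal(size=(5, 3))
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, params)
print(h.shape, c.shape)
```

Keeping `c` separate from `h` mirrors the split described above: the cell state carries information across timesteps, while the hidden state is what a downstream layer would read to predict the output.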