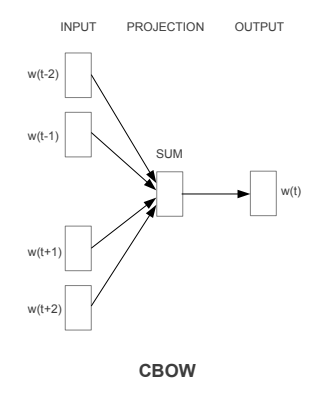

CBOW

- Continuous Bag of Words: one of the word2vec model architectures

- tries to predict the current target word (the center word) based on the source context words (surrounding words)

- Example: from “the quick brown fox jumps over the lazy dog”, with a context window of one word on each side of the target (two context words in total), we get (context_words, target_word) pairs such as ([quick, fox], brown), ([the, brown], quick), ([the, dog], lazy), and so on

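- context window: the span of surrounding words, to the left and right of the target, that is used as input for each prediction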

- Several times faster to train than skip-gram, with slightly better accuracy for frequent words.

- CBOW is prone to overfitting frequent words because they appear many times with the same contexts.

- Models the probability of a word given its context, P(word | context)

- it generalizes over all the different contexts in which a word can be used

- Like skip-gram, it is a neural network with a single hidden layer

- The synthetic training task uses the average of the vectors of multiple input context words, rather than a single input word as in skip-gram, to predict the center word.

- Again, the projection weights that turn one-hot words into averageable vectors, of the same width as the hidden layer, are interpreted as the word embeddings.
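The (context_words, target_word) pairs from the “quick brown fox” example can be generated with a short sketch like the one below. It assumes whitespace tokenization and treats `window` as the number of words taken on each side of the target; `cbow_pairs` is a hypothetical helper, not a function from any library. Words at the sentence edges simply get a shorter context here; other implementations may handle edges differently.

```python
def cbow_pairs(tokens, window=1):
    """Build (context_words, target_word) pairs for CBOW.

    `window` counts words on EACH side of the target, so window=1
    yields up to two context words per target.
    """
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]    # words before the target
        right = tokens[i + 1:i + 1 + window]   # words after the target
        pairs.append((left + right, target))
    return pairs


sentence = "the quick brown fox jumps over the lazy dog".split()
for context, target in cbow_pairs(sentence, window=1):
    print(context, "->", target)
```

With `window=1` this produces, among others, `['quick', 'fox'] -> brown` and `['the', 'dog'] -> lazy`; raising `window` widens every context symmetrically.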
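Written out, the averaging and the probability the model estimates look roughly as follows. The notation is assumed for illustration: $c$ is the number of context words on each side of the target $w_t$, $v_w$ is the input (projection) vector of word $w$ (the one kept as its embedding), $v'_w$ is its output vector, and $V$ is the vocabulary size. This shows the full-softmax form; practical word2vec training usually approximates it with negative sampling or hierarchical softmax.

$$
h = \frac{1}{2c} \sum_{\substack{-c \le j \le c \\ j \ne 0}} v_{w_{t+j}},
\qquad
P(w_t \mid \text{context}_t) = \frac{\exp\left({v'_{w_t}}^{\top} h\right)}{\sum_{w=1}^{V} \exp\left({v'_{w}}^{\top} h\right)},
\qquad
\mathcal{L} = -\log P(w_t \mid \text{context}_t)
$$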
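A minimal pure-Python sketch of the CBOW forward pass and one SGD update, assuming a full softmax output layer (real word2vec training typically uses negative sampling or hierarchical softmax instead, for speed). The names `cbow_forward` and `cbow_sgd_step`, the embedding width N = 8, the learning rate, and the random initialization are illustrative assumptions, not taken from any particular implementation. Rows of `W_in` play the role of the projection weights that end up as the word embeddings.

```python
import math
import random


def cbow_forward(context_ids, W_in, W_out):
    """Return (h, probs): the hidden vector and P(word | context) for every word.

    W_in:  V x N input (projection) weights; row i is the embedding of word i.
    W_out: V x N output weights; row i scores word i against the hidden vector.
    """
    n = len(W_in[0])
    # Hidden layer: plain average of the context words' input vectors
    # (no bias, no nonlinearity).
    h = [sum(W_in[c][k] for c in context_ids) / len(context_ids) for k in range(n)]
    # One score per vocabulary word: dot product of its output vector with h.
    scores = [sum(u[k] * h[k] for k in range(n)) for u in W_out]
    # Full softmax, shifted by the max score for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return h, [e / total for e in exps]


def cbow_sgd_step(context_ids, target_id, W_in, W_out, lr=0.05):
    """One SGD step on -log P(target | context); returns the loss before the update."""
    n = len(W_in[0])
    h, probs = cbow_forward(context_ids, W_in, W_out)
    loss = -math.log(probs[target_id])
    # d loss / d score_j = probs[j] - 1[j == target]
    err = [p - (1.0 if j == target_id else 0.0) for j, p in enumerate(probs)]
    # Gradient w.r.t. h, computed before W_out is modified.
    grad_h = [sum(err[j] * W_out[j][k] for j in range(len(W_out))) for k in range(n)]
    # Output vectors: d loss / d W_out[j] = err[j] * h
    for j, e in enumerate(err):
        for k in range(n):
            W_out[j][k] -= lr * e * h[k]
    # Each context word contributed 1/len(context) of h, so it gets that share of grad_h.
    for c in context_ids:
        for k in range(n):
            W_in[c][k] -= lr * grad_h[k] / len(context_ids)
    return loss


# Toy setup (hypothetical sizes): vocabulary from the example sentence, N = 8.
random.seed(0)
vocab = sorted(set("the quick brown fox jumps over the lazy dog".split()))
idx = {w: i for i, w in enumerate(vocab)}
V, N = len(vocab), 8
W_in = [[random.uniform(-0.5, 0.5) / N for _ in range(N)] for _ in range(V)]
W_out = [[random.uniform(-0.5, 0.5) / N for _ in range(N)] for _ in range(V)]

# Repeatedly train on the single pair ([quick, fox], brown).
context, target = [idx["quick"], idx["fox"]], idx["brown"]
losses = [cbow_sgd_step(context, target, W_in, W_out, lr=0.5) for _ in range(50)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
# After training, row idx[w] of W_in is the learned embedding of word w.
```

Averaging (rather than concatenating) the context vectors is what makes the model order-insensitive within the window, which is the “bag of words” part of the name.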