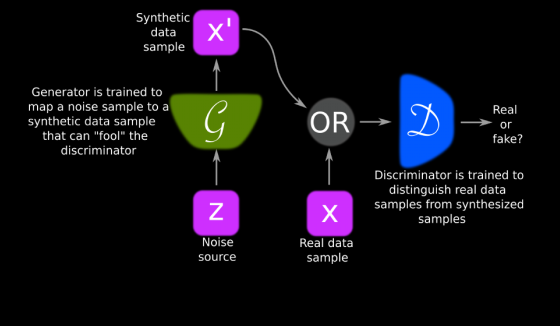

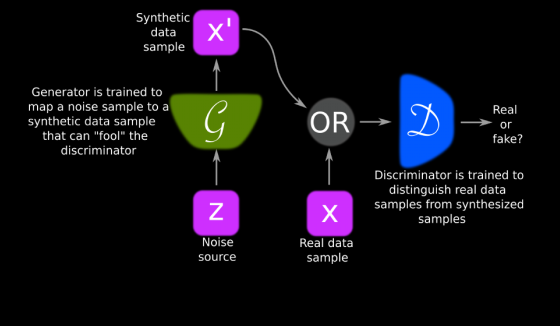

Basic GAN

- Generative Adversarial Networks

- Learn a probability distribution directly from data generated by that distribution

- No need for Markov chains or unrolled approximate inference networks during either training or sample generation

- Adversarial Learning

- Minimax game $\min_G \max_D V(G, D)$, where $V(G, D) = \mathbb{E}_{x \sim p_{\text{real}}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_{\text{latent}}(z)}[\log(1 - D(G(z)))]$

- G : Gradient Descent

- D : Gradient Ascent

- Discriminator Loss (Given Generator)

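- $L_{\text{disc}}(D_\theta) = -\frac{1}{2}\left(\mathbb{E}_{x \sim p_{\text{real}}(x)}[\log D_\theta(x)] + \mathbb{E}_{z \sim p_{\text{latent}}(z)}[\log(1 - D_\theta(G_\phi(z)))]\right)$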

- Generator Loss (Given Discriminator)

- $L_{\text{gen}}(G_\phi) = -\mathbb{E}_{z \sim p_{\text{latent}}(z)}[\log D_\theta(G_\phi(z))]$

- This is low when the discriminator is fooled by the generator, i.e. $D_\theta(x_{\text{gen}}) \approx 1$ for generated samples $x_{\text{gen}} = G_\phi(z)$
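As a rough illustration of the two losses, the sketch below estimates them from mini-batches of discriminator outputs, replacing each expectation with a sample mean. The function names and the example probabilities are hypothetical, and the inputs are assumed to lie strictly between 0 and 1 so the logarithms are defined.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Mini-batch estimate of L_disc = -1/2 (E[log D(x)] + E[log(1 - D(G(z)))])."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -0.5 * (real_term + fake_term)

def generator_loss(d_fake):
    """Mini-batch estimate of L_gen = -E[log D(G(z))]."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs D(G(z)) on generated samples.
fooled = [0.95, 0.90, 0.99]   # discriminator believes the fakes are real
caught = [0.05, 0.10, 0.01]   # discriminator rejects the fakes

print("generator loss when D is fooled:", generator_loss(fooled))
print("generator loss when D is not fooled:", generator_loss(caught))
```

The generator loss is smaller for the `fooled` batch than for the `caught` batch, matching the statement that the loss is low when $D_\theta(x_{\text{gen}}) \approx 1$.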

Training

- Pick a mini-batch of samples

- Update the discriminator by gradient descent on the discriminator loss, using the generator obtained from the previous update

- Update the generator by gradient descent on the generator loss, using the discriminator from the previous step
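The alternating updates can be sketched on a toy one-dimensional problem. Everything specific here is an assumption made for illustration: the generator is taken to be linear, `G(z) = a*z + b`, the discriminator is a logistic unit, `D(x) = sigmoid(w*x + c)`, real data is drawn from a Gaussian with mean 4, and gradients are written out by hand. It is a sketch of the update order, not a practical GAN, and it makes no claim about convergence.

```python
import math
import random

def sigmoid(s):
    # Numerically stable logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def mean(values):
    return sum(values) / len(values)

def disc_loss(w, c, a, b, x_real, z):
    # L_disc = -1/2 (mean log D(x) + mean log(1 - D(G(z))))
    d_real = [sigmoid(w * x + c) for x in x_real]
    d_fake = [sigmoid(w * (a * zi + b) + c) for zi in z]
    return -0.5 * (mean([math.log(p) for p in d_real])
                   + mean([math.log(1.0 - p) for p in d_fake]))

def gen_loss(w, c, a, b, z):
    # L_gen = -mean log D(G(z))
    return -mean([math.log(sigmoid(w * (a * zi + b) + c)) for zi in z])

def disc_step(w, c, a, b, x_real, z, lr):
    """One gradient-descent step on L_disc with the generator (a, b) held fixed."""
    x_fake = [a * zi + b for zi in z]
    d_real = [sigmoid(w * x + c) for x in x_real]
    d_fake = [sigmoid(w * x + c) for x in x_fake]
    # d/ds log(sigmoid(s)) = 1 - sigmoid(s); d/ds log(1 - sigmoid(s)) = -sigmoid(s)
    grad_w = -0.5 * (mean([(1 - p) * x for p, x in zip(d_real, x_real)])
                     - mean([p * x for p, x in zip(d_fake, x_fake)]))
    grad_c = -0.5 * (mean([1 - p for p in d_real]) - mean(d_fake))
    return w - lr * grad_w, c - lr * grad_c

def gen_step(w, c, a, b, z, lr):
    """One gradient-descent step on L_gen with the discriminator (w, c) held fixed."""
    d_fake = [sigmoid(w * (a * zi + b) + c) for zi in z]
    # dL_gen/dx = -(1 - D(x)) * w, then chain rule through x = a*z + b
    grad_a = mean([-(1 - p) * w * zi for p, zi in zip(d_fake, z)])
    grad_b = mean([-(1 - p) * w for p in d_fake])
    return a - lr * grad_a, b - lr * grad_b

def train(steps=200, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    w, c, a, b = 0.0, 0.0, 1.0, 0.0  # arbitrary starting parameters
    for _ in range(steps):
        # 1. Pick a mini-batch of real samples and latent samples.
        x_real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        # 2. Update the discriminator against the current generator.
        w, c = disc_step(w, c, a, b, x_real, z, lr)
        # 3. Update the generator against the freshly updated discriminator.
        a, b = gen_step(w, c, a, b, z, lr)
    return w, c, a, b

if __name__ == "__main__":
    print("w, c, a, b after training:", train())
```

Both steps are plain gradient descent because each network minimizes its own loss. Descending on $L_{\text{disc}}$ is the same as ascending on the value function $V$ from the discriminator's side.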