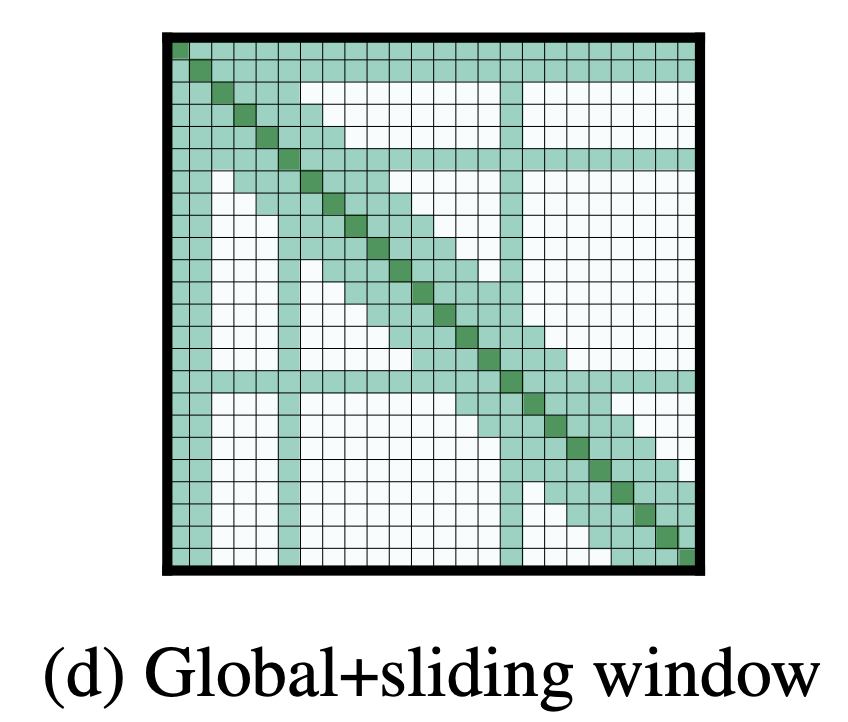

Global and Sliding Window Attention

- Sliding Window Attention and Dilated Sliding Window Attention are not always enough: some tasks need a few tokens that can see the whole sequence

- The remedy is to add "global attention" on a few pre-selected input locations

- This attention operation is symmetric: a token with global attention attends to all tokens across the sequence, and all tokens in the sequence attend to it
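
The combined pattern can be pictured as a boolean attention mask. The following is a minimal NumPy sketch, not a reference implementation: the function name `global_sliding_window_mask` and its parameters `seq_len`, `window`, and `global_positions` are hypothetical names chosen for illustration, and the mask is built densely for clarity, whereas an efficient implementation would avoid materializing the full matrix.

```python
import numpy as np

def global_sliding_window_mask(seq_len, window, global_positions):
    """Return a boolean mask where mask[i, j] is True if token i may attend to token j.

    Hypothetical helper for illustration only.
    """
    idx = np.arange(seq_len)
    # Local band: each token sees neighbours within window // 2 on either side.
    mask = np.abs(idx[:, None] - idx[None, :]) <= window // 2
    g = np.asarray(list(global_positions), dtype=int)
    mask[g, :] = True  # global tokens attend to every token
    mask[:, g] = True  # every token attends to the global tokens
    return mask

# Example: 8 tokens, window of 2, global attention on the first token only.
mask = global_sliding_window_mask(seq_len=8, window=2, global_positions=[0])
print(mask.astype(int))
```

Row 0 and column 0 of the printed mask are fully set, which is the symmetry described above; every other row contains only its local band plus the entry for the global token.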